2024/04/07

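Since we have to learn some of the API anyway, we might as well learn how to use it straight from examples; that also gives us a chance to review some basic machine learning. As before, though, I'll start by covering the classes and the usage of common functions, and then give examples.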

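This post is mainly about the various optimizers and how to use them. Most machine learning tasks come down to minimizing a loss, so once the loss is defined, the rest of the work can be handed over to an optimizer.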

https://www.tensorflow.org/versions/r0.11/api_docs/python/train.html

I'll cover a few of the more commonly used ones here; for the rest, see the documentation.

Classes:

Optimizer

GradientDescentOptimizer

AdagradOptimizer

AdagradDAOptimizer

MomentumOptimizer

AdamOptimizer

FtrlOptimizer

RMSPropOptimizer

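The base class for optimizers. This class defines the API for adding ops to train a model. You will basically never use this class directly; instead you use its subclasses, such as GradientDescentOptimizer, AdagradOptimizer, or MomentumOptimizer.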

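Here is the general usage flow

(compare it with the linear regression example later on to deepen your understanding)

In the training part, all you need to do is run the update op (here, the opt_op returned by minimize()).

That is the most basic usage pattern; the idea is really quite simple.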
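As a rough, hypothetical illustration of this flow, here is a plain-Python imitation with no TensorFlow involved. `Variable`, `SGD` and the hand-written gradient function are made up for this sketch; `minimize()` returns a zero-argument function that plays the role of `opt_op`, and the training loop just calls it. In TF 1.x the corresponding calls would be roughly `opt = tf.train.GradientDescentOptimizer(0.1)`, `opt_op = opt.minimize(loss)` and `sess.run(opt_op)` inside the loop.

```python
# A plain-Python imitation of the TF1 "build an update op, then run it" pattern.
# Variable and SGD are hypothetical toy classes, not TensorFlow classes.


class Variable:
    def __init__(self, value, name):
        self.value = value
        self.name = name


class SGD:
    """Toy gradient-descent optimizer loosely mimicking GradientDescentOptimizer."""

    def __init__(self, learning_rate):
        self.learning_rate = learning_rate

    def minimize(self, grad_fn, var_list):
        # grad_fn(var) returns d(loss)/d(var); we supply it by hand (no autodiff).
        def opt_op():
            grads = [(grad_fn(v), v) for v in var_list]  # compute every gradient first
            for g, v in grads:                           # then update the variables
                v.value -= self.learning_rate * g
        return opt_op  # "building" the op does not change any variable yet


# loss(w) = (w - 3)^2, so d(loss)/dw = 2 * (w - 3)
w = Variable(0.0, "w")


def loss():
    return (w.value - 3.0) ** 2


opt = SGD(learning_rate=0.1)
opt_op = opt.minimize(lambda v: 2.0 * (v.value - 3.0), var_list=[w])

for _ in range(100):  # the training loop just runs the update op repeatedly
    opt_op()

print(w.value, loss())  # w ends up very close to 3, loss close to 0
```

Each run of `opt_op` shrinks the distance to 3 by a factor of 0.8 here, so 100 runs are plenty.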

Processing gradients before applying them.

Calling minimize() takes care of both computing the gradients and applying them to the variables. If you want to process the gradients before applying them you can instead use the optimizer in three steps:

1. Compute the gradients with compute_gradients().

2. Process the gradients as you wish.

3. Apply the processed gradients with apply_gradients().

Example:
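A minimal sketch of the three steps, again as a plain-Python imitation rather than TensorFlow: `Variable`, `SGD`, and its `compute_gradients()`/`apply_gradients()` are toy stand-ins, and clipping each gradient to [-1, 1] with the hypothetical `cap()` helper is just one example of "processing". With the real API, a common TF 1.x version of step 2 is `[(tf.clip_by_value(g, -1., 1.), v) for g, v in grads_and_vars]`.

```python
# Plain-Python imitation of compute_gradients() -> process -> apply_gradients().
# Variable, SGD and cap() are hypothetical; this is not TensorFlow code.


class Variable:
    def __init__(self, value, name):
        self.value, self.name = value, name


class SGD:
    def __init__(self, learning_rate):
        self.learning_rate = learning_rate

    def compute_gradients(self, grad_fns, var_list):
        # Returns a list of (gradient, variable) pairs.
        return [(grad_fns[v.name](), v) for v in var_list]

    def apply_gradients(self, grads_and_vars):
        for g, v in grads_and_vars:
            v.value -= self.learning_rate * g


def cap(g, limit=1.0):
    """Example 'processing' step: clip a gradient into [-limit, limit]."""
    return max(-limit, min(limit, g))


# loss(a, b) = 50 * a^2 + b^2  ->  d/da = 100 * a, d/db = 2 * b
a, b = Variable(1.0, "a"), Variable(1.0, "b")
grad_fns = {"a": lambda: 100.0 * a.value, "b": lambda: 2.0 * b.value}

opt = SGD(learning_rate=0.1)
grads_and_vars = opt.compute_gradients(grad_fns, [a, b])  # 1. compute
capped = [(cap(g), v) for g, v in grads_and_vars]         # 2. process
opt.apply_gradients(capped)                               # 3. apply

print([g for g, _ in grads_and_vars])  # [100.0, 2.0]
print(a.value, b.value)                # both moved by 0.1 * 1.0
```

Without the capping step, `a` would jump from 1.0 to 1.0 - 0.1 * 100 = -9.0, overshooting the minimum at 0; capping keeps the step small.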

Important functions:

tf.train.Optimizer.__init__(use_locking, name)

Purpose: creates a new optimizer. It must be called by a subclass's constructor; in practice, we never call it directly.

Args:

use_locking: Bool. If True, use locks to prevent concurrent updates to variables.

name: A non-empty string. The name to use for accumulators created for the optimizer.

tf.train.Optimizer.minimize(loss, global_step=None, var_list=None, gate_gradients=1, aggregation_method=None, colocate_gradients_with_ops=False, name=None, grad_loss=None)

Purpose: adds operations that minimize loss by updating var_list. This method simply combines calls to compute_gradients() and apply_gradients(). If you want to process the gradients before applying them, call compute_gradients() yourself and then explicitly use apply_gradients().

Args:

loss: The value to minimize (a Tensor).

global_step: Optional Variable to increment by one after the variables have been updated.

var_list: Optional list of variables to update in order to minimize loss. Defaults to the list of variables collected in the graph under the key GraphKeys.TRAINABLE_VARIABLES.

gate_gradients: How to gate the computation of gradients. Can be GATE_NONE, GATE_OP, or GATE_GRAPH.

aggregation_method: Specifies the method used to combine gradient terms. Valid values are defined in the class AggregationMethod.

colocate_gradients_with_ops: If True, try colocating gradients with the corresponding op.

name: Optional name for the returned operation.

grad_loss: Optional. A Tensor holding the gradient computed for loss.

Returns:

An Operation that updates the variables in var_list. If global_step was not None, that operation also increments global_step.

Raises:

ValueError: If some of the variables are not Variable objects.
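To make the relationship concrete, this plain-Python toy (not TensorFlow; `SGD` and `Variable` are hypothetical) defines `minimize()` as nothing more than `compute_gradients()` followed by `apply_gradients()`, and bumps `global_step` by one every time the returned op runs, mirroring the Returns description above.

```python
# Plain-Python imitation (not TensorFlow): minimize() is compute_gradients()
# followed by apply_gradients(), and global_step goes up by one per update.


class Variable:
    def __init__(self, value, name):
        self.value, self.name = value, name


class SGD:
    def __init__(self, learning_rate):
        self.learning_rate = learning_rate

    def compute_gradients(self, grad_fns, var_list):
        return [(grad_fns[v.name](), v) for v in var_list]

    def apply_gradients(self, grads_and_vars, global_step=None):
        for g, v in grads_and_vars:
            v.value -= self.learning_rate * g
        if global_step is not None:
            global_step.value += 1  # incremented after the variables are updated

    def minimize(self, grad_fns, var_list, global_step=None):
        # Returns an "op": each call runs one compute + apply step.
        return lambda: self.apply_gradients(
            self.compute_gradients(grad_fns, var_list), global_step)


w = Variable(0.0, "w")
global_step = Variable(0, "global_step")
opt = SGD(learning_rate=0.1)
# loss(w) = (w - 3)^2  ->  d(loss)/dw = 2 * (w - 3)
opt_op = opt.minimize({"w": lambda: 2.0 * (w.value - 3.0)}, [w], global_step=global_step)

for _ in range(5):
    opt_op()

print(global_step.value)  # 5
print(w.value)            # 3 - 3 * 0.8**5, about 2.017
```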

tf.train.Optimizer.compute_gradients(loss, var_list=None, gate_gradients=1, aggregation_method=None, colocate_gradients_with_ops=False, grad_loss=None)

Purpose: computes the gradients of loss with respect to the variables in var_list. This is the first part of minimize(). It returns a list of (gradient, variable) pairs; if a given variable has no gradient, its gradient is None.

Args:

loss: The value to minimize (a Tensor).

var_list: Optional list of variables to update in order to minimize loss. Defaults to the list of variables collected in the graph under the key GraphKeys.TRAINABLE_VARIABLES.

gate_gradients: How to gate the computation of gradients. Can be GATE_NONE, GATE_OP, or GATE_GRAPH.

aggregation_method: Specifies the method used to combine gradient terms. Valid values are defined in the class AggregationMethod.

colocate_gradients_with_ops: If True, try colocating gradients with the corresponding op.

grad_loss: Optional. A Tensor holding the gradient computed for loss.
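Because a gradient can be None, code that processes `grads_and_vars` usually filters those pairs out first. The sketch below is a plain-Python imitation with a hypothetical `compute_gradients()` that returns None for a variable the loss does not use; in TF 1.x the analogous filter is typically written as `[(tf.clip_by_value(g, -1., 1.), v) for g, v in grads_and_vars if g is not None]`.

```python
# Plain-Python imitation (not TensorFlow) of a (gradient, variable) list that
# contains None for a variable the loss does not depend on.


class Variable:
    def __init__(self, value, name):
        self.value, self.name = value, name


def compute_gradients(grad_fns, var_list):
    """Hypothetical stand-in: gradient is None when grad_fns has no entry for var."""
    return [(grad_fns[v.name]() if v.name in grad_fns else None, v) for v in var_list]


# loss(w) = w^2 depends only on w; "unused" never appears in the loss.
w, unused = Variable(2.0, "w"), Variable(5.0, "unused")
grads_and_vars = compute_gradients({"w": lambda: 2.0 * w.value}, [w, unused])
print([(g, v.name) for g, v in grads_and_vars])  # [(4.0, 'w'), (None, 'unused')]

# Filter out None before processing; clipping None would raise a TypeError.
processed = [(max(-1.0, min(1.0, g)), v) for g, v in grads_and_vars if g is not None]
for g, v in processed:
    v.value -= 0.1 * g

print(w.value, unused.value)  # w moved to about 1.9, unused is untouched
```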

tf.train.Optimizer.apply_gradients(grads_and_vars, global_step=None, name=None)

Apply gradients to variables. This is the second part of minimize(). It returns an Operation that applies gradients.

Args:

grads_and_vars: List of (gradient, variable) pairs as returned by compute_gradients().

global_step: Optional Variable to increment by one after the variables have been updated.

name: Optional name for the returned operation. Defaults to the name passed to the Optimizer constructor.

Returns:

An Operation that applies the specified gradients. If global_step was not None, that operation also increments global_step.

Gating Gradients

Both minimize() and compute_gradients() accept a gate_gradients argument that controls the degree of parallelism during the application of the gradients.

The possible values are: GATE_NONE, GATE_OP, and GATE_GRAPH.

GATE_NONE: Compute and apply gradients in parallel. This provides the maximum parallelism in execution, at the cost of some non-reproducibility in the results. For example the two gradients of matmul depend on the input values: With GATE_NONE one of the gradients could be applied to one of the inputs before the other gradient is computed resulting in non-reproducible results.

GATE_OP: For each Op, make sure all gradients are computed before they are used. This prevents race conditions for Ops that generate gradients for multiple inputs where the gradients depend on the inputs.

GATE_GRAPH: Make sure all gradients for all variables are computed before any one of them is used. This provides the least parallelism but can be useful if you want to process all gradients before applying any of them.
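The matmul example for GATE_NONE can be imitated deterministically with scalars. This is a simulation, not TensorFlow: loss = a * b stands in for matmul (so d/da = b and d/db = a), `step_gated` computes both gradients before updating anything, and `step_ungated` shows one possible interleaving in which `a` is updated before the gradient for `b` is read. In real GATE_NONE runs the scheduling order can vary between runs, which is what makes results non-reproducible.

```python
# Deterministic simulation (not TensorFlow) of why GATE_NONE can change results.
# loss = a * b (a scalar stand-in for matmul): d/da = b, d/db = a.


def step_gated(a, b, lr):
    """GATE_OP-like: both gradients are computed from the current inputs
    before either variable is updated."""
    grad_a, grad_b = b, a
    return a - lr * grad_a, b - lr * grad_b


def step_ungated(a, b, lr):
    """One possible GATE_NONE interleaving: a is updated before grad_b is
    computed, so grad_b reads the already-updated a."""
    a = a - lr * b  # grad_a = b, applied immediately
    b = b - lr * a  # grad_b = a, but this is the *new* a
    return a, b


print(step_gated(1.0, 2.0, 0.5))    # (0.0, 1.5)
print(step_ungated(1.0, 2.0, 0.5))  # (0.0, 2.0)
```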

Slots

Some optimizer subclasses, such as MomentumOptimizer and AdagradOptimizer allocate and manage additional variables associated with the variables to train. These are called Slots. Slots have names and you can ask the optimizer for the names of the slots that it uses. Once you have a slot name you can ask the optimizer for the variable it created to hold the slot value.

This can be useful if you want to log or debug a training algorithm, report stats about the slots, etc.

tf.train.Optimizer.get_slot_names()

Return a list of the names of slots created by the Optimizer.

See get_slot().

Returns:

A list of strings.

tf.train.Optimizer.get_slot(var, name)

Return a slot named name created for var by the Optimizer.

Some Optimizer subclasses use additional variables. For example Momentum and Adagrad use variables to accumulate updates. This method gives access to these Variable objects if for some reason you need them.

Use get_slot_names() to get the list of slot names created by the Optimizer.

Args:

var: A variable passed to minimize() or apply_gradients().

name: A string.

Returns:

The Variable for the slot if it was created, None otherwise.
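A toy illustration of slots, assuming nothing about TensorFlow internals: the hypothetical `ToyMomentum` keeps one extra value per trained variable under the slot name "momentum" (a name chosen here to mirror MomentumOptimizer), creates it on first use, and exposes it through `get_slot_names()` and `get_slot()` with the same return conventions described above (a list of strings; None if the slot was never created).

```python
# Toy optimizer (not TensorFlow) that keeps an extra per-variable value, a "slot",
# the way a momentum optimizer keeps an accumulator for each trained variable.


class Variable:
    def __init__(self, value, name):
        self.value, self.name = value, name


class ToyMomentum:
    def __init__(self, learning_rate, momentum):
        self.learning_rate, self.momentum = learning_rate, momentum
        self._slots = {"momentum": {}}  # slot name -> {variable name: slot Variable}

    def apply_gradients(self, grads_and_vars):
        for g, v in grads_and_vars:
            acc = self._slots["momentum"].setdefault(
                v.name, Variable(0.0, v.name + "/momentum"))  # created on first use
            acc.value = self.momentum * acc.value + g
            v.value -= self.learning_rate * acc.value

    def get_slot_names(self):
        return sorted(self._slots)

    def get_slot(self, var, name):
        return self._slots.get(name, {}).get(var.name)  # None if never created


w = Variable(1.0, "w")
opt = ToyMomentum(learning_rate=0.1, momentum=0.5)
opt.apply_gradients([(2.0, w)])
opt.apply_gradients([(2.0, w)])

print(opt.get_slot_names())               # ['momentum']
print(opt.get_slot(w, "momentum").value)  # 3.0 (= 0.5 * 2.0 + 2.0)
print(opt.get_slot(w, "velocity"))        # None
```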

Other Methods

tf.train.Optimizer.get_name()

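This class is the optimizer that implements the gradient descent algorithm.

Constructor:

tf.train.GradientDescentOptimizer.__init__(learning_rate, use_locking=False, name='GradientDescent')

Purpose:

Constructs a new optimizer that uses the gradient descent algorithm.

Args:

learning_rate: A Tensor or a floating point value. The learning rate to use.

use_locking: If True use locks for update operations.

name: Optional name for the operation. Defaults to "GradientDescent".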
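Gradient descent applies variable <- variable - learning_rate * gradient to every variable. A small plain-Python sketch on an assumed loss x^2 + 10 * y^2 also shows why the learning rate matters: each step multiplies y by (1 - 20 * learning_rate), so any learning_rate above 0.1 makes y diverge.

```python
# Gradient descent update rule, element-wise: variable <- variable - learning_rate * gradient.
# Plain-Python sketch; the loss and learning rates below are illustrative choices.


def gradient_descent_step(params, grads, learning_rate):
    return [p - learning_rate * g for p, g in zip(params, grads)]


def run(learning_rate, steps=200):
    # loss(x, y) = x^2 + 10 * y^2, whose gradient is (2x, 20y)
    params = [3.0, 1.0]
    for _ in range(steps):
        grads = [2.0 * params[0], 20.0 * params[1]]
        params = gradient_descent_step(params, grads, learning_rate)
    return params


print(run(0.04))  # both coordinates shrink toward the minimum at (0, 0)
print(run(0.11))  # |1 - 20 * 0.11| > 1, so the y coordinate blows up
```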

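An optimizer that implements the Adadelta algorithm; it can be seen as an improved version of the Adagrad algorithm covered below.

Constructor:

tf.train.AdadeltaOptimizer.__init__(learning_rate=0.001, rho=0.95, epsilon=1e-08, use_locking=False, name='Adadelta')

Purpose: constructs an optimizer that uses the Adadelta algorithm.

Args:

learning_rate: A Tensor or a floating point value. The learning rate.

rho: A Tensor or a floating point value. The decay rate.

epsilon: A Tensor or a floating point value. A constant epsilon used to better condition the grad update.

use_locking: If True use locks for update operations.

name: Optional name for the operation. Defaults to "Adadelta".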
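For reference, a plain-Python sketch of one Adadelta step for a scalar variable. It follows Zeiler's update rule (a running average of squared gradients, plus a running average of squared updates that replaces a hand-tuned step size); scaling the final step by `learning_rate` is my understanding of how TF applies it, so treat that part as an assumption. The values lr=1.0 and epsilon=1e-6 are illustrative and differ from the defaults in the signature above.

```python
import math

# Adadelta update for one scalar variable, following Zeiler's rule; the final
# step is scaled by lr (an assumption about how TF applies it).
# lr=1.0 and epsilon=1e-6 are illustrative, not the TF defaults.


def adadelta_step(var, grad, accum, accum_update, lr=1.0, rho=0.95, epsilon=1e-6):
    accum = rho * accum + (1 - rho) * grad * grad  # running average of g^2
    update = math.sqrt(accum_update + epsilon) / math.sqrt(accum + epsilon) * grad
    accum_update = rho * accum_update + (1 - rho) * update * update  # running average of dx^2
    return var - lr * update, accum, accum_update


# Minimize loss(w) = (w - 3)^2, gradient 2 * (w - 3), starting from w = 0.
w, accum, accum_update = 0.0, 0.0, 0.0
history = [w]
for _ in range(100):
    w, accum, accum_update = adadelta_step(w, 2.0 * (w - 3.0), accum, accum_update)
    history.append(w)

print(history[1], history[-1])  # w creeps up from 0 toward 3; early steps are tiny
```

Note how small the first steps are: the step size has to build up in `accum_update` before Adadelta moves quickly.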

Optimizer that implements the Adagrad algorithm.

See the original Adagrad paper (Duchi, Hazan and Singer, 2011).
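The core idea of Adagrad is that every coordinate accumulates its own sum of squared gradients and its step is divided by the square root of that sum. The plain-Python sketch below shows one step on two coordinates whose gradients differ by a factor of 10; it is an illustration only, uses no epsilon, and relies on the positive initial accumulator (0.1, the default in the signature below) to avoid dividing by zero. `adagrad_step` is a hypothetical helper.

```python
import math

# Adagrad on a 2-element parameter list: each coordinate keeps its own running
# sum of squared gradients and its step is scaled by 1 / sqrt(sum).
# No epsilon here; the positive initial accumulator avoids division by zero.


def adagrad_step(values, grads, accums, learning_rate):
    new_values, new_accums = [], []
    for x, g, acc in zip(values, grads, accums):
        acc = acc + g * g
        new_values.append(x - learning_rate * g / math.sqrt(acc))
        new_accums.append(acc)
    return new_values, new_accums


values = [0.0, 0.0]
accums = [0.1, 0.1]  # initial_accumulator_value=0.1
grads = [10.0, 1.0]  # gradients that differ by a factor of 10
values, accums = adagrad_step(values, grads, accums, learning_rate=0.5)
print(values)  # both first steps are close to -0.5 despite the 10x gradient gap
```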

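tf.train.AdagradOptimizer.__init__(learning_rate, initial_accumulator_value=0.1, use_locking=False, name='Adagrad')

Construct a new Adagrad optimizer.

Args:

learning_rate: A Tensor or a floating point value. The learning rate.

initial_accumulator_value: A floating point value. Starting value for the accumulators, must be positive.

use_locking: If True use locks for update operations.

name: Optional name prefix for the operations created when applying gradients. Defaults to "Adagrad".

Raises:

ValueError: If the initial_accumulator_value is invalid.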

The Optimizer base class provides methods to compute gradients for a loss and apply gradients to variables. A collection of subclasses implement classic optimization algorithms such as GradientDescent and Adagrad.

You never instantiate the Optimizer class itself, but instead instantiate one of the subclasses.

Optimizer that implements the Momentum algorithm.

tf.train.MomentumOptimizer.__init__(learning_rate, momentum, use_locking=False, name='Momentum', use_nesterov=False)

Construct a new Momentum optimizer.

Args:

learning_rate: A Tensor or a floating point value. The learning rate.

momentum: A Tensor or a floating point value. The momentum.

use_locking: If True use locks for update operations.

name: Optional name prefix for the operations created when applying gradients. Defaults to "Momentum".

use_nesterov: If True, use Nesterov momentum.
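A plain-Python sketch of the momentum update for a scalar variable, assuming the usual formulation accum <- momentum * accum + g followed by var <- var - learning_rate * accum. The Nesterov branch uses the common reformulation var <- var - learning_rate * (g + momentum * accum), which is an assumption about how `use_nesterov` behaves. `momentum_minimize` is a hypothetical helper.

```python
# Momentum update for one scalar variable:
#   accum <- momentum * accum + g
#   plain:    var <- var - lr * accum
#   Nesterov: var <- var - lr * (g + momentum * accum)   (assumed reformulation)


def momentum_minimize(grad_fn, w, learning_rate, momentum, steps, use_nesterov=False):
    accum = 0.0
    for _ in range(steps):
        g = grad_fn(w)
        accum = momentum * accum + g
        if use_nesterov:
            w -= learning_rate * (g + momentum * accum)
        else:
            w -= learning_rate * accum
    return w


def grad(w):
    return 2.0 * (w - 3.0)  # gradient of loss(w) = (w - 3)^2


print(momentum_minimize(grad, 0.0, 0.1, 0.9, steps=300))
print(momentum_minimize(grad, 0.0, 0.1, 0.9, steps=300, use_nesterov=True))
```

Both variants end up very close to the minimum at 3; on this quadratic the Nesterov form damps the oscillation faster.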

Optimizer that implements the Adam algorithm.

Constructor:

tf.train.AdamOptimizer.__init__(learning_rate=0.001, beta1=0.9, beta2=0.999, epsilon=1e-08, use_locking=False, name='Adam')

Construct a new Adam optimizer.

Initialization:

m_0 <- 0 (Initialize initial 1st moment vector)

v_0 <- 0 (Initialize initial 2nd moment vector)

t <- 0 (Initialize timestep)

The update rule for variable with gradient g uses an optimization described at the end of section 2 of the paper:

t <- t + 1

lr_t <- learning_rate * sqrt(1 - beta2^t) / (1 - beta1^t)

m_t <- beta1 * m_{t-1} + (1 - beta1) * g

v_t <- beta2 * v_{t-1} + (1 - beta2) * g * g

variable <- variable - lr_t * m_t / (sqrt(v_t) + epsilon)

The default value of 1e-8 for epsilon might not be a good default in general. For example, when training an Inception network on ImageNet a current good choice is 1.0 or 0.1.

Note that in the dense implementation of this algorithm, m_t, v_t and variable are updated even if g is zero, but in the sparse implementation, m_t, v_t and variable are not updated in iterations where g is zero.

Args:

learning_rate: A Tensor or a floating point value. The learning rate.

beta1: A float value or a constant float tensor. The exponential decay rate for the 1st moment estimates.

beta2: A float value or a constant float tensor. The exponential decay rate for the 2nd moment estimates.

epsilon: A small constant for numerical stability.

use_locking: If True use locks for update operations.

name: Optional name for the operations created when applying gradients. Defaults to "Adam".
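The update rule above translates almost line by line into plain Python. `adam_minimize` is a hypothetical helper for a single scalar variable; the quadratic loss and learning_rate=0.1 are illustrative choices. A property worth noticing: the first step has a size of roughly learning_rate regardless of how large the gradient is, because m_t / sqrt(v_t) normalizes the gradient scale away.

```python
import math

# Adam for one scalar variable, transcribing the update rule listed above:
#   lr_t = learning_rate * sqrt(1 - beta2^t) / (1 - beta1^t)
#   m_t = beta1 * m_{t-1} + (1 - beta1) * g
#   v_t = beta2 * v_{t-1} + (1 - beta2) * g * g
#   variable <- variable - lr_t * m_t / (sqrt(v_t) + epsilon)


def adam_minimize(grad_fn, x, steps, learning_rate=0.001,
                  beta1=0.9, beta2=0.999, epsilon=1e-8):
    m, v = 0.0, 0.0                # m_0 <- 0, v_0 <- 0
    for t in range(1, steps + 1):  # t <- t + 1
        g = grad_fn(x)
        lr_t = learning_rate * math.sqrt(1 - beta2 ** t) / (1 - beta1 ** t)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        x = x - lr_t * m / (math.sqrt(v) + epsilon)
    return x


def grad(x):
    return 2.0 * (x - 3.0)  # gradient of loss(x) = (x - 3)^2


def big_grad(x):
    return 1000.0 * grad(x)  # same minimum, gradient scaled by 1000


# The first step is about learning_rate in size, whatever the gradient scale.
print(adam_minimize(grad, 0.0, 1, learning_rate=0.1))
print(adam_minimize(big_grad, 0.0, 1, learning_rate=0.1))
print(adam_minimize(grad, 0.0, 2000, learning_rate=0.1))  # ends up near 3
```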

If you're not familiar with the theory behind linear regression, see:

http://blog.csdn.net/xierhacker/article/details/53257748

http://blog.csdn.net/xierhacker/article/details/53261008

If you already know it, skip ahead.

Straight to the code:

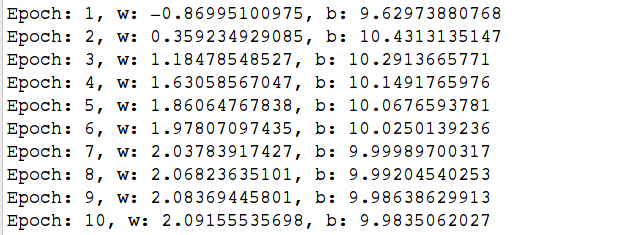

Results:
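As a text companion to the screenshot, here is a minimal plain-Python version of the same kind of task: linear regression fitted by batch gradient descent on a mean-squared-error loss. It is not the author's TensorFlow code; the noise-free data y = 2x + 1, the learning rate, the step count and the helper name `train_linear_regression` are made-up assumptions. With TensorFlow, the loop body would instead be a single `sess.run` of the op returned by `minimize()`.

```python
# Linear regression y ~ w * x + b, trained by batch gradient descent on the
# mean squared error. Plain-Python sketch with made-up data, not TensorFlow.


def train_linear_regression(xs, ys, learning_rate=0.05, steps=2000):
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(steps):
        # loss = mean((w * x + b - y)^2)
        errs = [w * x + b - y for x, y in zip(xs, ys)]
        grad_w = 2.0 / n * sum(e * x for e, x in zip(errs, xs))
        grad_b = 2.0 / n * sum(errs)
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


xs = [i / 10 for i in range(-10, 11)]  # 21 points in [-1, 1]
ys = [2.0 * x + 1.0 for x in xs]       # noise-free line y = 2x + 1
w, b = train_linear_regression(xs, ys)
print(round(w, 3), round(b, 3))        # 2.0 1.0
```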